The Rise and Fall of Object Relational Mapping

By Mark Rickerby

I want to apologise in advance for this talk being a little more incoherent than I’d like. My ideas on the subject of object relational mapping (ORM) are still evolving, but I wanted to put this out in front of people early-on in a rough and messy form and get feedback. If it makes sense (and I make no promise that it does) I might consider taking this research further, going deeper into the details both philosophically and technically. So please tell me what you think and whether you find it interesting!

The structure of this transcript follows the outline and flow of the original talk, along with all of the key slides and points. I’ve added additional details, links to primary sources and insights from discussions and comments after the talk. I’m certainly aware that there’s potentially holes and mistakes in the history here. It’s surprisingly hard to find material on the 1990s in technology. A lot of information about corporate software from that era has been buried or lost altogether. I still feel like I have a lot to learn and a lot still to understand.

This is speculative history

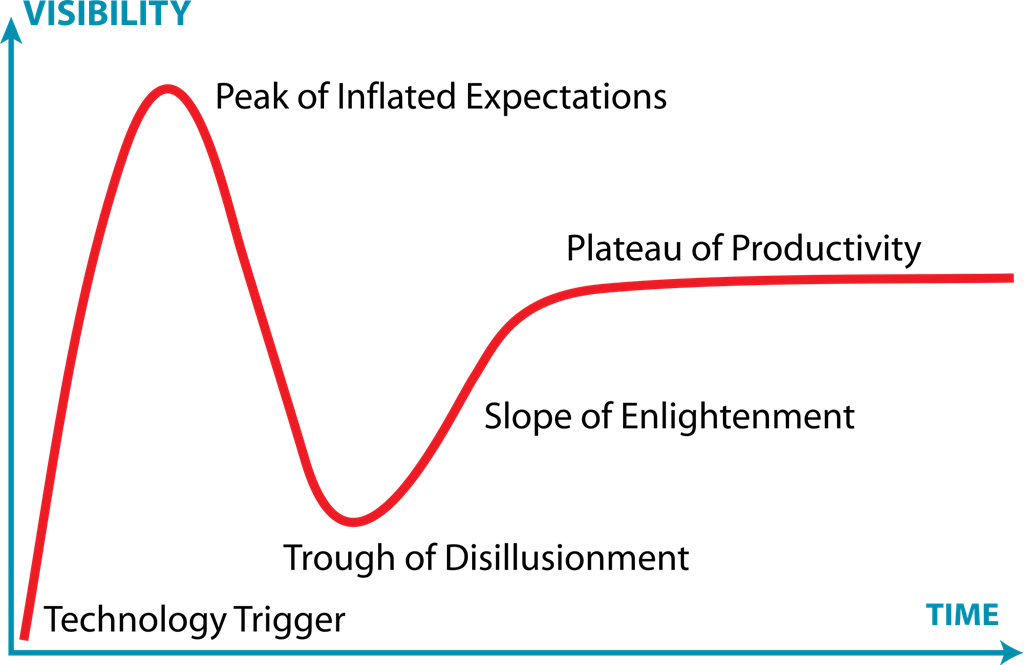

Many of you will be familiar with this chart. It’s the Gartner Hype Cycle, a rising and falling wave representing the highs and lows of technology adoption over time. To me, it illustrates the collision course of an emerging technology with a large mainstream audience.

Although it could easily be used as a simple and entertaining representation of the story of object relational mapping, I want to reflect on the hype cycle because it sits in somewhat problematic contrast to the actual study of history. Many philosophers and writers have suggested that history does move in cycles, but there’s nothing measurable or historically objective about this particular hype cycle. Whatever methodology produced it is handwavy and arbitrary, almost to the point of existing outside time. It tells a nice story but there’s not a lot of concrete information we can glean from it.

In the software industry, we don’t talk much about history, despite the fact that the tools and technologies that we use are heavily influenced by historical accidents and contingencies. We tend to think that technology exists for a reason—that the problems it solves are objective, extant things out there in the world. But I want to push back and question that viewpoint.

Is it possible that many of the things we take for granted in software are just arbitrary quirks arising from a particular road that the industry has gone down?

1995

The first commercially successful object-relational mapping framework was TOPLink, a Smalltalk product which was later ported to an early version of Java. TOPLink implemented what we’d call the data mapper pattern in today’s parlance. Its main competitor was another Smalltalk framework, ParcPlace ObjectLens, which was probably where these ideas originated. Other interesting frameworks and libraries of the time took quite a different approach. Rogue Wave DBTools in C++ overloaded the standard streams API to project SQL queries onto objects, while the early NeXT operating system provided DbKit, which introduced the now standard concept of a database abstraction layer.

IBM also came to the party with its own framework called ObjectExtender, part of the VisualAge Smalltalk product. At this point I could segue into the weird story of how IBM and Sun all but killed Smalltalk in the late 1990s, but although that is fascinating and probably relevant, it’s kind of a tangent away from what I want to focus on, which is the broader technological context of the time.

Object-relational mapping emerged in the context of two significant IT trends.

As the cost of commodity hardware plummeted from the mid 1980s onwards, the industry shifted away from mainframes, towards mass deployment of GUI applications based on a client-server model. But the business world at the time was still utterly reliant on the mainframes, and the relational databases that had emerged in the 1970s and 1980s which stored huge repositories of persistent information. So one of the core responsibilities of this emerging class of ‘enterprise applications’ was to connect to these databases, unlocking the potential of software to automate tedious workflows and enable non-technical users to manage and manipulate the data.

Associated with this transition, object-oriented programming entered the industry with an unprecedented wave of hype, both commercial and theoretical. Objects were everywhere. They could model the real world directly in the computer’s memory. They could solve all the industry’s problems.

As the heavily-hyped ideals of object-oriented programming came into conflict with the well established and proven relational database technology, the concept of an ‘object-relational impedance mismatch’ emerged.

Object-relational impedance mismatch

The most important thing to recognise about this concept is that it’s a metaphor.

‘Impedance’ is a reference to the phenomenon of electrical impedance which is a measurable physical process. In contrast, the object-relational impedance is associative, suggestively framed with the gravitas of scientific rigour.

If we unpack its meaning, we get a set of conceptual, technical and cultural problems.

I want to explore the problems in each of these three areas individually, then tie it all together by fast forwarding back to 2015 to consider what we’ve learned from this particular tangent in software history.

Conceptual problems

The Structure of Scientific Revolutions by Thomas Kuhn is a book from the late 1960s which reshaped popular views of scientific progress. Most early descriptions of science viewed progress as cumulative, with new knowledge stacking neatly into the existing order. Kuhn showed that science actually proceeds chaotically, with different schools of thought unable to understand one another, and with revolutionary, discontinuous ruptures in the the scientific tradition leading to completely new ways of seeing the world. Famously, Kuhn introduced the concept of ‘paradigms’ to describe these particular scientific traditions.

I see Structure as a fairly problematic book. The concept of scientific revolutions is important and Kuhn gets it broadly right, which is itself a profound intellectual achievement. However, the book is structured around equivocating a philosophically precise definition of ‘paradigms’ with extraordinarily imprecise language. As a result, it doesn’t offer as much historical or meta-historical insight as it should, and over the years, has gained a more controversial relativist interpretation that implies the complete lack of universal scientific truth.

The concept of paradigms might be enervating, but it’s significant here because it’s how object-oriented programming and relational theory were framed during the 1990s. The object-relational impedance mismatch is often described as a consequence of trying to merge these two competing paradigms which cannot be reconciled, due to their completely different structures.

The object-oriented modelObjects encapsulating behaviour and data |

The relational modelTuples grouped into relations |

Obviously, these are overly simplistic definitions. I tried to make them as compact and essential as I could, so there are various missing details and caveats.

We can see straight away that both models—or paradigms if you like—are centered around nouns. They function as attempts to dictate how data in programs should be structured.

It’s also clear they both have extremely different views of the relationship of programs to time. The relational model deals with time implicitly and concretely in terms of ‘facts’, wheras a sense of time is almost entirely missing from the object-oriented model, a deep conceptual flaw that challenges the idea that objects should be the primary unit of organising software.

The incommensurability of the relational and object-oriented models has to do with representation. Objects hold references to other objects in memory but the possible associations between object types are bound when the code is written. Wheras, in the relational world, nothing is bound ahead of time. Relations are dynamically joined together from rows of data when queries are executed. Values in the relational model are always globally accessible.

In terms of accessing data by its identity, this is a fundamental divide. Object-oriented languages rely heavily on reference types and aliases. Structurally, objects are often composed from other objects, so the same object in-memory may appear as a reference in many different places. There’s no way of directly representing these nested structures in the relational model where values are atomic and rows are unique. Similarly, inheritance and polymorphism are core parts of most object-oriented systems, but have no counterpart in the relational world.

Object-relational mapping then, is an attempt to cross the divide through an additional layer of software which provides structure-preserving transformations between the two domains. We’ll look at this in more detail soon. For now, I want to highlight the particular assumption here that presupposes an object-oriented approach. That is, ORM is generally viewed as a solution from within the paradigm of OO.

When the idea of ORM emerged, it seems to have been viewed as a pragmatic compromise, rather than anything particularly revelatory or necessary. It’s worth mentioning the huge body of forgotten work from that time period on object databases and object-relational databases. The subtitle of one of the major books on this topic says it all: Object Relational DBMSs: The Next Great Wave, published in 1995.

It’s easy for us to laugh at this, but it wasn’t necessarily obvious then that object databases were not the next great wave, and that the ORM compromise would go on to become the dominant industry-wide approach to working with databases. Just like the demise of Smalltalk as the industry’s dominant OO language, this is another strangely fascinating story of 1990s vision and technology hubris, but unfortunately, it’s beyond the scope what I’m able to cover here.

Classes vs tables

So we know there are significant conceptual differences between the relational and object-oriented models. But this can be said of many different aspects of computing. Are these differences enough to justify the severity and profundity of the language we use to describe their incompatibility?

A good way to explore this question is to compare the theoretical problems with these mismatching models to the actual implementation problems that arise in practice.

Perhaps I’m guilty of a little hindsight bias here. Multi-paradigm programming wasn’t obvious in the 1990s, but it’s the standard way of doing things today.

What’s interesting is that when you get down to the details of ORM in practice, you see another set of concerns start to emerge, more to do with the skew of technology towards modelling implementation details rather than ideas.

It’s rare to find any so-called OO system that’s actually based on pure object principles. Instead, most systems are mashups of general-purpose data structures like arrays, hash tables and strings, with classes acting as building blocks for slotting together chunks of procedural code.

Looking at relational database technology, we see a similar divergence between theory and practice. SQL is not the pure relational model. The implementation of modern databases diverges from the original theory in significant ways. Standards are ugly and sprawling, replete with vendor-specific political compromises.

From this perspective, we can look at the impedance mismatch in a more mundane light, as simply a technical problem of mapping the implementation detail of classes to the implementation detail of tables, with the sub-problem of managing the complexity of interfacing with the database by constructing SQL strings.

The reality of industry practice suggests a very different set of concerns than the traditional formulation of the impedance mismatch. It’s possible that a huge chunk of the problems of ORM arise as a consequence of the flawed representation of SQL queries as strings rather than composable data types based on predicate calculus.

Consider the following thought experiement: Could you implement the relational model in pure OO? Conversely, could you implement an OO model with the relational model? How would you do it?

Technical Problems

The closest thing to a canonical set of ORM patterns is found in Martin Fowler’s book Patterns of Enterprise Application Architecture from 2003. Today, the analogy of the database as ‘crazy aunt shut up in the attic’ seems a bit contemptuous and unkind. At the time, it served as a hyperbolic way of underscoring the motivation for developers to work entirely within the bounds of their favoured programming language, avoiding too much direct contact with the underlying data storage layer.

It’s often said that naming things is one of the most difficult problems in computing, which is one of the reasons why this book was so influential. Here, for the first time, the tribal knowledge and industry folklore around ORM was presented in a well organised way, with specific sections devoted to explaining various proven strategies along with information about their use cases and tradeoffs.

From this, we can distill a core set of conventional ORM features (it’s worth pointing out that many of these features were apparently present early on in the TOPLink and ObjectLens products, though it’s unclear how usable these products were in relation to frameworks like Hibernate and Rails):

- Two-way mapping between object properties and database columns

- Mapping 1-1, 1-n, n-m associations between objects to relationships between tables

- Lazy loading (proxy)

- Object caching (identity map)

Various strategies for implementing these features are detailed in P of EAA.

There are three core architectural patterns which slice up this problem in slightly different ways:

- Row/Table gateway

- Data mapper

- Active record

In turn, there are various meta strategies for automatically constructing these mappings and reducing boilerplate (note that these aren’t necessarily mutually exclusive):

- Code generation

- Metadata mapping

- Schema inference

These patterns are frequently criticised for their complexity and intricacy. In exchange for huge productivity gains, developers are weighed with the abstraction penalty of having relatively simple objects poking out above the surface like an iceberg with an enormous hulk of submerged detail underneath.

The Vietnam of computer science

By the mid-2000s, the industry was mired in a trough of disillusionment around the ORM problem, a consequence of a legacy of complicated and expensive technological failures. Ted Neward’s infamous analogy to the Vietnam war expresses the sense of frustration at the time: “Object-relational mapping is the Vietnam of computer science. It represents a quagmire which starts well, gets more complicated as time passes, and before long entraps its users in a commitment that has no clear demarcation point, no clear win conditions, and no clear exit strategy.”

Around the same time as these discussions were taking place, David Heinemeier Hansson launched Ruby on Rails. Over the next two years, Rails grew to become the canonical implementation of the active record pattern. DHH’s aggressive marketing of the framework exerted a controversial influence on the software industry, leading a shift away from complex and impenetrable complexity in software frameworks, towards designs optimised for clarity and productivity.

One of the major selling points of Rails was the way it extended the traditional active record pattern to include relationships between objects in addition to the basic class-table mapping that forms the core of the pattern.

class Team < ActiveRecord::Base

belongs_to :club

has_many :players

end

This level of expressiveness and minimalism was unprecedented at the time.

I also want to highlight a smaller and more subtle feature in Rails that enabled the convention-based magic of active record to work.

Inflector.pluralize("customer")

# => "customers"

Inflector.singularize("people")

# => "person"

As far as I know, this API design originated in a library on CPAN written by Damien Conway. It’s not often talked about, but this method of mapping back and forth between singular and plural representations of identifiers was one of the key innovations of Rails. By automating this convention, Rails was able to radically simplify the amount of manual code required to connect database tables, class names, and URL routes, without sacrificing English readability.

The success of Rails led to an explosion of similar web frameworks and active record implementations in various other languages.

The cumulative effect of this runaway success is that the use of ORM (and active record in particular) has attained the status of conventional wisdom: an idea that is widely held but largely unexamined.

The design of Rails is deliberately slanted towards supporting CRUD (Create-Read-Update-Delete), which is an ideal use case for the active record pattern. In the early days of Rails, it made sense to conflate various data modelling concerns into the single concept of classes inheriting from the active record base. If Rails had launched with separate concepts and a separate directory structure for persistence and domain modelling, I’m fairly sure it wouldn’t have taken off in quite the same way.

But gluing data persistence and model logic together comes at a cost. When it comes to managing the growth of Rails applications, particularly those that have intricate business logic spread over a large set of features and capabilities, the framework defaults suffer from a kind of metaphor shear where the concept of ‘models’ directly mapping to database tables is overshadowed by the variety and complexity of business logic and domain model concepts.

Oftentimes, developers lack the experience and subtlety to recognise these problems—or else they’re deliberately focusing on GettingShitDone™ at the expense of architecture. The results are all variations of the fabled (now clichéd) Rails application with the 3,000 line User class.

“Most Rails developers do not write object-oriented Ruby code. They write MVC-oriented Ruby code by putting models and controllers in the expected locations.”

—Five Common Rails Mistakes, Mike Perham

There’s a huge amount of confusion and misinformation on this topic, so I want to clarify a few things. First of all, the purpose and scope of building a domain model is not well understood in the industry, particularly in the context of Rails applications. The original P of EAA book, explains the domain model concept by explicitly referencing Domain Driven Design by Eric Evans, and frames it as an alternative to active record. Active record is positioned as a tool for simple applications where the database schema drives a lot of behaviour. Building a domain model is an expensive and risky undertaking—it’s recommended only in situations where there’s a payoff in terms of the value that the modelling provides in simplifying complex and interconnected business problems.

The concept of a model in MVC is not the same as the concept of a domain model. They are two entirely different contexts, at a different level of granularity, with different forces and consequences. When active record is used as the ‘M’ in MVC, it becomes very difficult to tease these separate things apart.

The way I usually like to explain this is to ask the question: what is a model?

Is it a record class? A record object? Or is it an abstract composition of concepts that represents the meaningful (‘real world’) content of a software system?

A good way to summarize this is to consider what’s implied by the default directory structure within Rails and many other frameworks. Why do we have app/models and not app/model? What does this say about the way we model things in our applications?

There is nothing to stop anyone from writing object-oriented Rails code, but people don’t generally do it because—as a sort of behavioural economics effect—the defaults of the tool shape the way the tool is used. People tend to follow the path of least resistance, particularly when they’re in a rush or under pressure. Also, building simple and powerful domain models is really hard.

This leads me back to the way that the ORM problem has been framed technically.

Reflecting on the accumulated industry knowledge of the past decade, we know that large monolithic applications de-compose fairly rigorously into constellations of distributed services and external API integrations. This gets to the essence of the metaphor shear. We have so many frameworks and application architectures based around the specific problem of mapping to and from databases. But in practice, we need mappings between all kinds of different objects and data sources. In these situations, the database is just one of many concerns.

Cultural problems

What insights can we gain from a reactionary, conservative historical vision from the 1920s that’s resurfacing in various problematic ways today? It might be hyperbolic, but I think it’s kind of interesting to contrast a famous ‘paradigm shift’ from the study of humanities with the sort of verbiage we get in the software industry with things like the hype cycle. We can find interesting similarities as well as differences.

There are many things wrong with such an absolutist view of social reality, but the idea of dynamic creative cultures morphing into insincere civilizations as a tragic comedy still resonates on many levels.

To understand the specific cultural forces that shaped the rise of ORM technology, we need to go back to 1995, and examine the predominant mythologies and workplace dynamics of the software industry back then.

Earlier, I mentioned the context of the old mainframes being slowly phased out, but large organisations being utterly reliant on the massive relational databases, spinning away behind the scenes.

In these conditions, a certain subculture of software professionals had a great deal of power. DBAs, data modellers, analysts—relational database professionals as a whole—exerted a dictatorial pressure on technology choices and the direction and planning of software projects. The prevailing approach to design was to model the database schema first, locking it down with a specification, then passing it on waterfall-style to the team of programmers who were tasked with building the actual software.

Many data professionals were extremely cynical about object-oriented programming and resistant to change. OO was seen as a temporary fad, and not worthy of serious consideration.

At the same time, a new subculture was forming, centered around object-oriented design and the wisdom gained from more than a decade of Smalltalk and C++ being used in production systems. The object-obsessed movement was equally dogmatic and absolutist as the database professionals but they could be quite open about their biases and preferences. Phrases like ‘object zealot’ and ‘object bigot’ did not come from their detractors, but from the object crowd themselves.

The ‘impedance mismatch’ is as much a product of this cultural divide as it is an actual technical or conceptual problem. Framing the mapping problem as one of translating between paradigms that could not be reconciled in their own terms allowed the object people to stick with their objects and the data people to stick with their databases. The lack of native SQL data types in the Java standard libraries meant that strings became the de-facto API for communicating between these technologies, so neither side had to compromise. Other languages and platforms followed suit. It wasn’t until Microsoft released LINQ in 2008 that a reasonable solution for this lack of structure was marketed at the mass audience of developers.

Another way of looking at it is that the early industry subcultures were a product of dysfunctional corporate politics that reframed software development as an assembly line rather than a creative and imaginative activity.

The context I’ve been talking about here is centered on the 1990s, but the trajectory of these arguments extended well into the next decade too.

We’ve already discussed the technical influence of Rails here, but it wasn’t just the technical ideas and design of Rails that were important. The growth of Rails in 2006 and onwards also had a significant cultural influence which focused critical attention on a lot of the shoddy and destructive practices of the corporate software industry.

Such acerbic criticism had its upsides and its downsides. Fighting for simplicity in software was a worthy and laudable goal, but such sweeping change was accompanied by a great deal of cognitive dissonance and clashes of values at a more personal level.

Fuck complexityFuck bureacracyFuck JavaFuck the databaseFuck you? |

Back to the future

So where are we today? And what have we learned?

Relational databases won

The object-relational and object database paradigm never succeeded. Today, there are more alternative database technologies than ever before, but we see relational databases at the heart of of the world’s largest and most successful businesses, and powering millions of web applications.

Object-oriented programming won

Object-oriented programming wasn’t a temporary fad. It’s the dominant feature in almost all widely used programming languages in existence today.

Large applications are multi-paradigm

We now talk about things like ‘polyglot programming’ and ‘polyglot persistence’ in ways that would have been unthinkable 10-15 years ago. Mainstream object-oriented languages have significant influences from functional languages, and the legacy of Smalltalk innovation is much better understood.

Objects are not the primary unit of application design

Loosely distributed systems in the form of RESTful APIs and service oriented architectures have had a huge influence on the way that applications are designed with microservices and bounded contexts, which have replaced class diagrams and object models in most current development contexts. We have a much better understanding of the flaws and limitations of object-oriented programming. It has become more socially acceptable to adapt our thinking and tools to different problem spaces, rather than force everything in our systems to conform to an absolutist vision.

Precision designs are fragile

Obsessing over the lifecycle of persistent objects leads to overcomplicated precision designs that have a tendency to be brittle and difficult to modify. The counter-intuitive secret of domain driven design is that it’s not actually about accurately modelling the real world. Instead, it’s about evolving systems by breaking complex problems down into simple components that can be more easily adapted or replaced when requirements change.

Argument cultures harm creativity

Finally, I want to quickly mention the relationship between argument cultures, unregulated aggression and adversarial masculinity, which seems to have been the root cause of a lot of problems in this space. By recognising and promoting the importance of a creative, respectful, thoughtful culture, we can encourage different ways of solving problems rather than shutting them down.

The way forward is to question our assumptions.

Object-relational mapping

Given what we now know about the history and context of object-relational mapping, we’re ready to challenge the conventional wisdom.

This is not to say that mapping between databases and objects is a solved problem or a dead-end in software. What we should challenge is the absolute centrality of this problem in our software tools and frameworks. To a certain extent, the object part and the relational part are just implementation details.

Which leaves us with the concept of simply mapping things.