Archive Snapshots

Now that I’ve spent a bit of time working on this, I’m starting to have lots of ideas for improving content modelling, publishing workflows and graphic design. But before I get carried away with all that, I want to sketch out a high-level plan for getting the existing content and archives under control and making sure everything there is in a baseline state of being readable.

First, I go through the table of content types and pick out anything that needs specific attention in terms of snapshotting CSS and JS for archive pages. This is weird stuff that mostly relates to the history of this website, but it can be captured fairly concisely (really, the thing I’m looking to show here is how to use text documents to very quickly wrap up and drive these sorts of refactoring decisions without getting mired in complexity and housekeeping issues).

| Content type | CSS era | JS dependencies |
|---|---|---|
| essay | 2010, 2014, 2018, 2021 | jQuery (2.1), D3 (3.5), chart.js (2.6), loess, bigfoot |
| transcript | 2015, 2018 | D3 (3.5) |
| entries | 2004, 2010 | |

The entries are a new content type I’ve created by pulling out old journal posts that were encapsulated in the former ‘information apocalypse’ and ‘antic disposition’ notebooks. I want to re-render most of this old content as static HTML dumps with original CSS/aesthetics and just leave them there. Not a big deal either way, but it feels wasteful and lingering to pump them through the publishing and site generation routine over and over again, forcing their text into 2021 era templates.

I also see that the essays have a lot of JavaScript that I do not want to think about or touch ever again. This is mainly stuff tied up in a very old version of D3 from long before Babel, module bundling, or ES imports were a thing in the frontend world.

So this seems like pretty solid justification for changes to the content model and static site generator to better support archived content. Annoying, but it has to be done.

Investigating the legacy mess

To avoid getting stuck in long winding refactoring journeys with the old stuff (instead of writing new stuff), I should put a few barriers and constraints in place to stay focused on solving the actual problem (snapshotting old webpages), rather than exploding this into more of a design process than is needed.

The approach that feels most comfortable for the future of this site is to organise everything around the CSS and HTML snapshots, since that is where the most complex dependencies sit.

When most of these essays and presentations were developed, the site was compiling HTML, images and UI assets from separate sources, pushing them into static URLs under /css, /img and /js which were linked to randomly and chaotically from within the HTML content.
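One way to see how scattered those links are is to tally them per folder. The following is a minimal sketch, not the site's actual tooling: the `legacy_asset_refs` helper and the sample file names are hypothetical, and a regex scan is only a rough stand-in for a real HTML parser.

```ruby
# Hypothetical helper: collect references to the old static asset folders
# (/css, /img, /js) found in src and href attributes of an HTML string.
ASSET_REF = %r{(?:src|href)\s*=\s*["'](/(css|img|js)/[^"']+)["']}i

def legacy_asset_refs(html)
  refs = Hash.new { |hash, key| hash[key] = [] }
  # Each match yields the full path and the folder it lives under.
  html.scan(ASSET_REF) { |path, folder| refs[folder.downcase] << path }
  refs
end

# Sample markup with made-up file names, for illustration only.
sample = <<~HTML
  <link rel="stylesheet" href="/css/essay-2014.css">
  <img src="/img/chart.png">
  <script src="/js/d3.v3.js"></script>
HTML

legacy_asset_refs(sample).each do |folder, paths|
  puts "#{folder}: #{paths.join(', ')}"
end
```

Running this over each archived page would give a rough inventory of which shared assets a snapshot needs to carry with it.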

The missing essay

There’s an additional challenge, in that I want to bring over Land of the Wrong White Clowns, published on Medium in 2015, which got a huge amount of attention and fits in perfectly with the ethos of the back catalog here. In order to make that work on the site, I need to convert the HTML and decide which parts of Medium’s text styling can be replicated in the archive template.

Thinking about what to do here makes me realise the answer to snapshotting was sitting there in the background all along.

Wayback Machine

In 2018, it looks like I replaced a lot of old flexbox and Sass complexity with a simpler and leaner CSS foundation, so a bunch of the essays and transcripts will work fine. They can use a single shared archive stylesheet and JS bundle.

Everything else will need to have individual bundles made up. This also offers the opportunity to do weirder and more interesting stuff. With Wrong White Clowns, it means getting the original text from Medium as it was in 2015, rather than now. With some of the more ancient writing, it means grabbing site-specific CSS and HTML that is gone from the newer versions of the site (particularly stuff that was written with the long-forgotten PHP CMS and MySQL database).

Avoiding changes to the generation pipeline

Since there’s already a lot of frontend wrangling needed here, it seems best to avoid making any changes to the background content management system. Hopefully I can do most of this by changing a few URLs with minimal templating and CSS changes.

Content type changes might be necessary. Essays and transcripts will stay the same but changes to metadata fields are required to denote whether something is archived or not. And I’m gonna need to rip the old entries out of notes and snapshot them into some sort of legacy blog container.
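The metadata change could be as small as one boolean field. A minimal sketch, assuming content files begin with YAML front matter between `---` lines (the source doesn't say how metadata is stored); the `archived` field and the template names are hypothetical.

```ruby
require "yaml"

# Assumption: metadata sits in YAML front matter at the top of each file.
FRONT_MATTER = /\A---\s*\n(.*?)\n---\s*\n/m

# Returns the front matter as a hash, or an empty hash when there is none.
def front_matter(source)
  match = FRONT_MATTER.match(source)
  return {} unless match

  YAML.safe_load(match[1]) || {}
end

# Hypothetical `archived` field: only an explicit `true` counts.
def archived?(source)
  front_matter(source)["archived"] == true
end

# Hypothetical template names, chosen from the flag.
def template_for(source)
  archived?(source) ? "archive" : "default"
end

sample = <<~TEXT
  ---
  title: An old essay
  archived: true
  ---
  Body text.
TEXT

puts template_for(sample)
```

Defaulting to not archived when the field is missing means existing essays and transcripts would not need touching until they are snapshotted.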

So I can’t avoid some changes, but I want to keep them as small and non-intrusive as possible. What I’m doing here is trying to avoid any big entanglements with legacy content stuff and the new generation of the site, ensuring all of this runs seamlessly under the old site generator that is already working.

Fixing the Essays

Because of various dynamic charts and data things, whatever I do, I will have to go through each essay one by one and test in browsers, so it makes sense to draw up a chronological list and crawl through it step by step.

One of my criticisms of agile bullshit is that the processes and rituals so often lead people away from fast and effective approaches like this. Sometimes, software managers with zero experience in media and publishing just need to step back and let people with deep trade skills smash out a job manually to a high standard without tracking every tiny increment of work in full-stack ‘tickets’ or ‘stories’.

It’s at this point I realise I’ve forgotten that a big chunk of the work is probably going to involve checking for broken links. Otherwise, what does fixing mean? What can I do to descope and simplify?
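For the local half of the link checking, something like the following might do. It is a rough sketch under stated assumptions: the helper names are hypothetical, every local target is resolved against a single site root (relative links would need the page's own directory), and external URLs are skipped rather than fetched.

```ruby
# Pulls the value out of href and src attributes.
LINK_ATTR = /(?:href|src)\s*=\s*["']([^"']+)["']/i

# Returns link targets that point at this site: fragments,
# protocol-relative URLs and anything with a scheme are dropped.
def local_targets(html)
  html.scan(LINK_ATTR).flatten.reject do |target|
    target.start_with?("#", "//") || target.match?(%r{\A[a-z][a-z0-9+.-]*:}i)
  end
end

# Returns the local targets that have no matching file under root.
def broken_local_links(html, root)
  local_targets(html).reject do |target|
    path = target.sub(/[?#].*\z/, "") # ignore query strings and fragments
    File.exist?(File.join(root, path))
  end
end

sample = '<a href="/essays/old-post/">Old</a> <a href="https://example.com/">Out</a>'
puts local_targets(sample)
```

Calling `broken_local_links(html, "path/to/generated/site")` on each page gives a list to work through by hand, which fits the checklist approach better than a full crawler.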

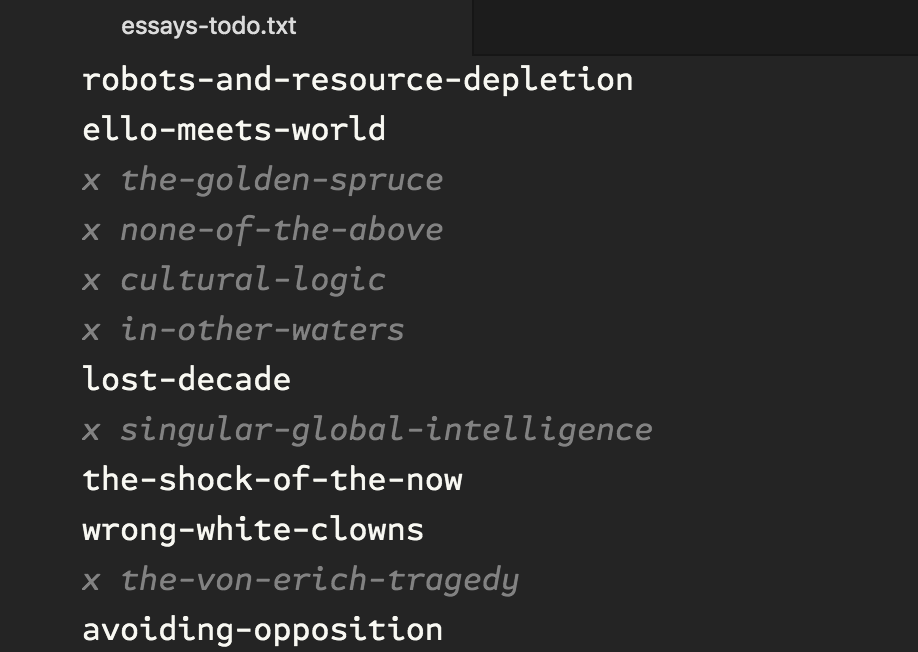

A fast way to make a todo list is to dump each essay to a line in a text file that can be checked off. I have various syntaxes in my text editors that provide lots of different options for these plain todo lists. One of the simplest line-oriented formats is shown below:

$ ruby -r pathname -e 'Dir["content/essays/*"].each { |d| puts Pathname.new(d).basename }' > essays-todo.txt

Yes, my main use for Ruby is as a practical extraction and reporting language but writing a script is overkill for this task, hence the one-off command to file redirection.
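If the list should arrive with checkboxes already in place, the same idea stretches a little further. A minimal sketch, assuming a `[ ]` prefix as the todo marker (the exact syntax depends on the editor and is not specified here); `todo_lines` is a hypothetical helper, and it sorts by name rather than by date.

```ruby
# Turns essay directory paths into unchecked todo lines, sorted by name.
# The "[ ]" marker is an assumed syntax; swap in whatever the editor expects.
def todo_lines(paths)
  paths.map { |path| File.basename(path) }.sort.map { |name| "[ ] #{name}" }
end

puts todo_lines([
  "content/essays/robots-and-resource-depletion",
  "content/essays/avoiding-opposition"
])
```

Passing `Dir["content/essays/*"]` as the argument would reproduce the one-liner's input with the markers added.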

Now I can go through and check off each item one by one and see what problems I run into. I do everything that doesn’t require any special attention to JavaScript or content styling first, keeping the site generator running and continuously testing in browser tabs as I go.

The only change needed so far is the following CSS, a hack that took no more than a couple of minutes to write and test. It’s more complex and ad-hoc than the minimal reset I started with but roughly represents the bare minimum I can handle before the typography feels rank and intolerably shitty.

.essay {
  margin-left: 1em;
}

.essay-column {
  font-size: 22px;
  max-width: 960px;
}

.essay-cover {
  margin: 96px 1em;
}

.essay-title h1 {
  font-size: 48px;
  margin: 0;
}

.essay-title p {
  margin: 0;
  font-size: 36px;
}

From here, everything else is going to need standalone attention to fix broken JavaScript and handle unique editorial features in the HTML.

First step is to concatenate static bundles from the dependencies used by each individual essay from 2014. This isn’t the most performant or reusable approach but it’s more robust and resilient to future changes to store all this article-specific JS alongside the archived content. Once generated, it shouldn’t need to be touched or moved around again. It’s kept completely separate from JS used in other parts of the website.

There’s already an old JS minifier, terser, installed in the project so I’ll give that a go first. This is one of those situations that’s probably right on the borderline for whether or not I’d choose to script it or run the commands manually. Leaning towards a one-off script here because it’s easier to document, and it feels like manually running the command multiple times is going to be slower and more error-prone than writing it as copypasta in a code file that can be carefully checked and edited before running once.

First, I set up the skeleton of a Rake task with the outputs I want for each command:

task :archive_bundles do
  sh "npx terser [input] > content/essays/robots-and-resource-depletion/bundle.js"
  sh "npx terser [input] > content/essays/avoiding-opposition/bundle.js"
  sh "npx terser [input] > content/talks/narrative-anxiety/bundle.js"
end

To get this working I need to replace the [input] markers with the dependencies I documented in the table at the start of this process.

task :archive_bundles do
  # Dependencies for each bundle, in the order they should load
  robots = ["d3.v3.js", "loess.js"].map { |s| "interface/scripts/#{s}" }
  avoiding = ["jquery-2.1.3.js", "bigfoot.plugin.js", "d3.v3.js"].map { |s| "interface/scripts/#{s}" }

  # Minify each set into a single file stored alongside the archived content
  sh "npx terser #{robots.join(' ')} > content/essays/robots-and-resource-depletion/bundle.js"
  sh "npx terser #{avoiding.join(' ')} > content/essays/avoiding-opposition/bundle.js"
  sh "npx terser interface/scripts/d3.v3.js > content/talks/narrative-anxiety/bundle.js"
end
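If more bundles turn up later, the same task could be driven from a table of outputs and inputs instead of one variable per essay. This is only a sketch of that alternative: `BUNDLES`, `SCRIPT_DIR` and `terser_command` are hypothetical names, while the paths are the ones used above.

```ruby
SCRIPT_DIR = "interface/scripts"

# Output bundle path => dependency files, in load order.
BUNDLES = {
  "content/essays/robots-and-resource-depletion/bundle.js" => ["d3.v3.js", "loess.js"],
  "content/essays/avoiding-opposition/bundle.js" => ["jquery-2.1.3.js", "bigfoot.plugin.js", "d3.v3.js"],
  "content/talks/narrative-anxiety/bundle.js" => ["d3.v3.js"]
}.freeze

# Builds the shell command that minifies the scripts into one output file.
def terser_command(output, scripts)
  inputs = scripts.map { |script| File.join(SCRIPT_DIR, script) }
  "npx terser #{inputs.join(' ')} > #{output}"
end

# Inside a Rakefile this would become:
#   task :archive_bundles do
#     BUNDLES.each { |output, scripts| sh terser_command(output, scripts) }
#   end
BUNDLES.each { |output, scripts| puts terser_command(output, scripts) }
```

Printing the commands first keeps the "check carefully, then run once" property of the copypasta version.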

Now I can go through each source file, remove legacy script references and move the embedded data scripting into a separate main.js file, wrapped in a DOMContentLoaded event listener. Then, at the bottom of each content file, I link to the permanent snapshotted JavaScript dependencies before testing:

<script src="bundle.js"></script>
<script src="main.js"></script>
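The move into main.js was done by hand here, but for a larger batch it could be roughed out in Ruby. A minimal sketch with hypothetical helper names: it lifts inline scripts out with a regex (fine for simple pages, not a real HTML parser), leaves `<script src>` tags in place for manual removal, and wraps the collected code in a DOMContentLoaded listener.

```ruby
# Matches <script> elements with no src attribute, capturing the body.
INLINE_SCRIPT = %r{<script(?![^>]*\bsrc\s*=)[^>]*>(.*?)</script>}im

# Returns the HTML with inline scripts removed, plus the script bodies.
def extract_inline_scripts(html)
  bodies = html.scan(INLINE_SCRIPT).flatten.map(&:strip).reject(&:empty?)
  [html.gsub(INLINE_SCRIPT, ""), bodies]
end

# Wraps the collected script bodies in a DOMContentLoaded listener.
def wrap_in_dom_ready(bodies)
  indented = bodies.join("\n\n").gsub(/^(?=.)/, "  ") # indent non-empty lines
  "document.addEventListener(\"DOMContentLoaded\", function () {\n#{indented}\n});\n"
end

page = <<~HTML
  <div id="chart"></div>
  <script src="d3.v3.js"></script>
  <script>
  var data = [1, 2, 3];
  </script>
HTML

_stripped, bodies = extract_inline_scripts(page)
puts wrap_in_dom_ready(bodies)
```

The output still needs reading before it is saved as main.js, since inline scripts sometimes depend on where they sat in the page.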

It worked first time, but testing reveals a few blowouts in the charts, which seem related to missing CSS.

But at least the JS mess is tidied up, trading off a few extra downloads and less shared caching for never having to touch these files again as part of the main site build process. It was more straightforward and less meandering than I had first feared (writing these notes about the process took a lot longer than actually doing it).

So now it’s time to fix the remaining glitches, snapshot the Wayback Machine CSS from different eras of the site and look at handling archive templates.